Definition

Regularization is a technique used in artificial intelligence and machine learning to improve the accuracy of models by preventing overfitting. Overfitting occurs when a model learns the training data too well, including its noise and outliers, and as a result performs poorly on new, unseen data. Regularization addresses this problem by adding a penalty term to the loss function used to train the model. The penalty discourages the model from learning overly complex patterns, which results in simpler, more robust models that perform better on new data [1].
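A minimal sketch of the "loss plus penalty" idea in Python. It assumes a linear model, mean squared error as the loss, and an L2 penalty; the function names and the toy data are illustrative, not from any library. The two features in the toy data are identical, so several weight vectors fit it perfectly, and the penalty term breaks the tie in favor of the smaller, simpler weights.

```python
def mse(weights, xs, ys):
    """Mean squared error of a linear model y_hat = w . x (no bias term)."""
    total = 0.0
    for x, y in zip(xs, ys):
        y_hat = sum(w * xi for w, xi in zip(weights, x))
        total += (y_hat - y) ** 2
    return total / len(xs)


def l2_regularized_loss(weights, xs, ys, lam):
    """Training loss plus an L2 penalty: lam * (sum of squared weights)."""
    penalty = lam * sum(w * w for w in weights)
    return mse(weights, xs, ys) + penalty


# Toy data (hypothetical): both features are identical and y = 2 * feature.
xs = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
ys = [2.0, 4.0, 6.0]

for weights in ([1.0, 1.0], [3.0, -1.0]):
    print(weights, "data loss:", mse(weights, xs, ys),
          "regularized loss:", l2_regularized_loss(weights, xs, ys, lam=0.1))
```

Both weight vectors have zero training error, but with `lam=0.1` the penalized loss is 0.2 for `[1.0, 1.0]` and 1.0 for `[3.0, -1.0]`, so training on the regularized loss prefers the simpler solution.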

For example, consider an exam scenario in which StudyBuddy AI, a tool designed to help students prepare for WAEC exams, becomes dangerously obsessed with memorizing past questions.

StudyBuddy AI analyzes 10 years of WAEC past questions and discovers these patterns:

- Question 15 in Math is always about quadratic equations.

- Question 8 in English is always about a comprehension passage.

- Question 12 in Biology is always about photosynthesis.

- Question 20 in Chemistry is always about organic chemistry.

The AI concludes: "I can predict every question!"

Then comes exam day, and with it disaster: WAEC changes its pattern, and StudyBuddy AI's students are left confused and unprepared.

With regularization, however, the AI stops memorizing patterns and starts learning the underlying concepts:

- In Math, it covers concepts related to quadratic equations, trigonometry, algebra, geometry, etc.

- In English, it covers concepts related to comprehension, letter writing, essay writing, grammar, etc.

- In Biology, it covers concepts related to photosynthesis, genetics, ecology, human anatomy, etc.

As a result, students develop deep understanding instead of memorization and are now prepared for any question variation.

Origin

Regularization predates modern artificial intelligence. The idea is usually traced to the mathematician Andrey Tikhonov, whose work on ill-posed inverse problems began in the 1940s; he proposed dealing with such problems by adding a regularization term to the objective function. Since then, regularization has become a fundamental concept in machine learning, encompassing techniques such as L1 and L2 regularization, dropout, and data augmentation. These techniques have proven effective at improving the generalization and performance of machine learning models, contributing to major advances in AI applications such as image recognition, natural language processing, and autonomous systems [3].
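A sketch of these objectives in modern notation (the symbols A, b, Γ, λ, w and Ω are introduced here for illustration). Tikhonov's approach stabilizes an ill-posed system Ax ≈ b by adding a penalty to the least-squares objective, and the L1 and L2 penalties used in machine learning follow the same "loss plus penalty" pattern:

```latex
\hat{x} = \arg\min_{x} \; \lVert A x - b \rVert_2^2 + \lambda \lVert \Gamma x \rVert_2^2,
\qquad \lambda > 0

\mathcal{L}_{\text{reg}}(w) = \mathcal{L}_{\text{data}}(w) + \lambda \, \Omega(w),
\qquad
\Omega_{L2}(w) = \sum_j w_j^2,
\qquad
\Omega_{L1}(w) = \sum_j \lvert w_j \rvert
```

With Γ = I, the first objective is ordinary L2 (ridge) regularization, and a larger λ gives a more strongly regularized solution. Dropout and data augmentation do not add an explicit penalty term of this form; they regularize by changing how the model is trained.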

Context and Usage

Regularization is a fundamental technique in machine learning and statistics that helps prevent overfitting and improve model generalization. Its applications cut across several industries and technologies, for example:

Healthcare: Regularization is applied in the analysis of medical data to improve the accuracy of disease detection and reduce false positives.

Retail: In demand forecasting, regularization can improve the precision of inventory predictions, leading to more efficient supply chain management.

Manufacturing: Regularization can improve predictive maintenance models by making them more accurate at identifying likely equipment malfunctions, so that failures can be prevented [4].

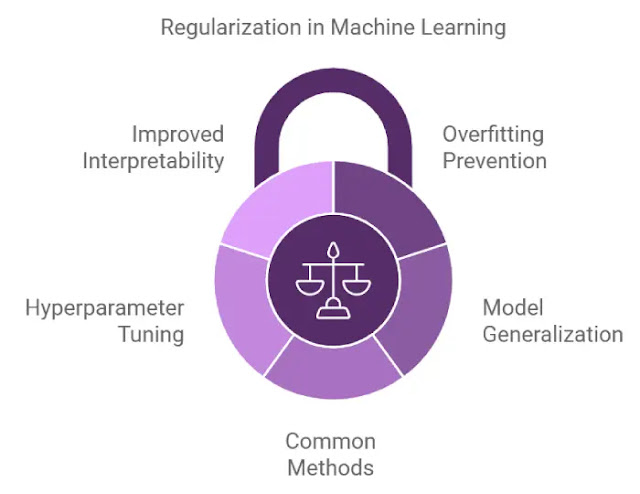

Why it Matters

In artificial intelligence, regularization adds a penalty for model complexity, pushing the model to balance learning from the training data against generalizing to new data. This keeps the model from becoming too rigid and specialized to its training examples, so that it can instead adapt to different scenarios and make accurate predictions.
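To make the trade-off concrete, here is a minimal sketch under simplifying assumptions: a one-feature linear model with no intercept, trained on squared error plus an L2 penalty of strength `lam` (a name chosen for this example). Setting the derivative of sum((y - w*x)^2) + lam * w^2 to zero gives the closed-form weight w = sum(x*y) / (sum(x*x) + lam), so increasing `lam` shrinks the weight toward zero. The data points are hypothetical.

```python
def ridge_weight(xs, ys, lam):
    """Closed-form minimizer of sum((y - w*x)^2) + lam * w^2 (one feature, no bias)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


# Hypothetical data that roughly follows y = 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

for lam in [0.0, 1.0, 10.0, 100.0]:
    print(f"lam={lam:>6}: w={ridge_weight(xs, ys, lam):.3f}")
```

With `lam=0.0` this is ordinary least squares; as `lam` grows, the weight shrinks, trading some fit to the training data for a simpler model. A value that is too large underfits, so the penalty strength is usually tuned on held-out data.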

In business terms, regularization is similar to cross-training employees so they have a broader skill set and can handle a wider range of tasks, resulting in a business that is more versatile and resilient [3].

In Practice

A real-world example of regularization in practice is Netflix, which uses it to improve its recommendation system. By applying techniques such as L2 regularization, Netflix can avoid overfitting to user data and generalize better to unseen preferences. This shows how important regularization is for making accurate predictions in real-world applications [2].
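The source does not describe Netflix's implementation, so the following is only a generic sketch of a standard technique: L2-regularized matrix factorization, the kind of recommender model popularized during the Netflix Prize. Each user and item gets a small vector of latent factors, a rating is predicted as their dot product, and the L2 penalty (strength `lam`) keeps the factors from growing large enough to memorize the few observed ratings. The toy ratings, the hyperparameter values, and all names (`train_mf`, `predict`, `lam`) are hypothetical.

```python
import random


def predict(P, Q, u, i):
    """Predicted rating: dot product of user u's and item i's factor vectors."""
    return sum(pu * qi for pu, qi in zip(P[u], Q[i]))


def train_mf(ratings, n_users, n_items, k=2, lr=0.02, lam=0.1, epochs=500, seed=0):
    """Learn user and item factors with SGD on squared error plus an L2 penalty.

    ratings: list of (user, item, rating) triples observed in training.
    lam: L2 regularization strength; larger values pull factors toward zero.
    """
    rng = random.Random(seed)
    # Small random initial factors: k latent dimensions per user and per item.
    P = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_users)]
    Q = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_items)]
    order = list(ratings)
    for _ in range(epochs):
        rng.shuffle(order)
        for u, i, r in order:
            err = r - predict(P, Q, u, i)
            for f in range(k):
                pu, qi = P[u][f], Q[i][f]
                # SGD step on err^2 + lam * (|p_u|^2 + |q_i|^2); the constant
                # factor 2 is folded into lr, and "- lam * ..." is the L2 shrinkage.
                P[u][f] += lr * (err * qi - lam * pu)
                Q[i][f] += lr * (err * pu - lam * qi)
    return P, Q


# Hypothetical toy ratings: (user, item, rating) on a 1-5 scale.
ratings = [(0, 0, 5.0), (0, 1, 3.0), (1, 0, 4.0), (1, 2, 1.0), (2, 1, 4.0), (2, 2, 2.0)]
P, Q = train_mf(ratings, n_users=3, n_items=3)

for u, i, r in ratings:
    print(f"user {u}, item {i}: actual {r}, predicted {predict(P, Q, u, i):.2f}")
print(f"user 0, item 2 (unseen): predicted {predict(P, Q, 0, 2):.2f}")
```

Only six ratings are observed, yet the model still produces a prediction for the unseen pair. Raising `lam` pulls the factors, and therefore the predictions, toward zero instead of fitting the training ratings as tightly as possible.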

See Also

Related Model Training and Evaluation concepts:

- Stop Sequences: Predefined tokens that signal when text generation should end

- Tagging (Data Labelling): Annotating data for supervised learning

- Temperature: Controlling randomness in generated output

- Tuning: Process of adjusting model parameters to optimize performance

- Turing Test: Evaluating machine intelligence

References

- GeeksforGeeks. (2025). Regularization in Machine Learning.

- Lyzr Team. (2024). Regularization.

- Iterate. (2025). Regularization: The Definition, Use Case, and Relevance for Enterprises.

- Lawton, G. (2024). Machine learning regularization explained with examples.